The rise of conversational models based on artificial intelligence (AI) has raised many questions about their capabilities and limitations. One of the most discussed is whether they can interpret, and ultimately imitate, human feelings. With this in mind, one technology company has developed a model that focuses specifically on reading its users' emotions.

Hume AI, the company behind the Empathic Voice Interface (EVI) chatbot, describes the tool as "the first emotionally intelligent artificial intelligence." It works like a chatbot, a format that became popular once ChatGPT turned into an everyday application for millions of people. What sets it apart, according to its developers, is that it can identify and examine the emotions of the people using it in order to give personalized responses.

"Based on more than 10 years of research, our models instantly capture the nuances of expressions in audio, video and images: uncomfortable laughter, sighs of relief, nostalgic looks and more," the Hume team explains on its official website.

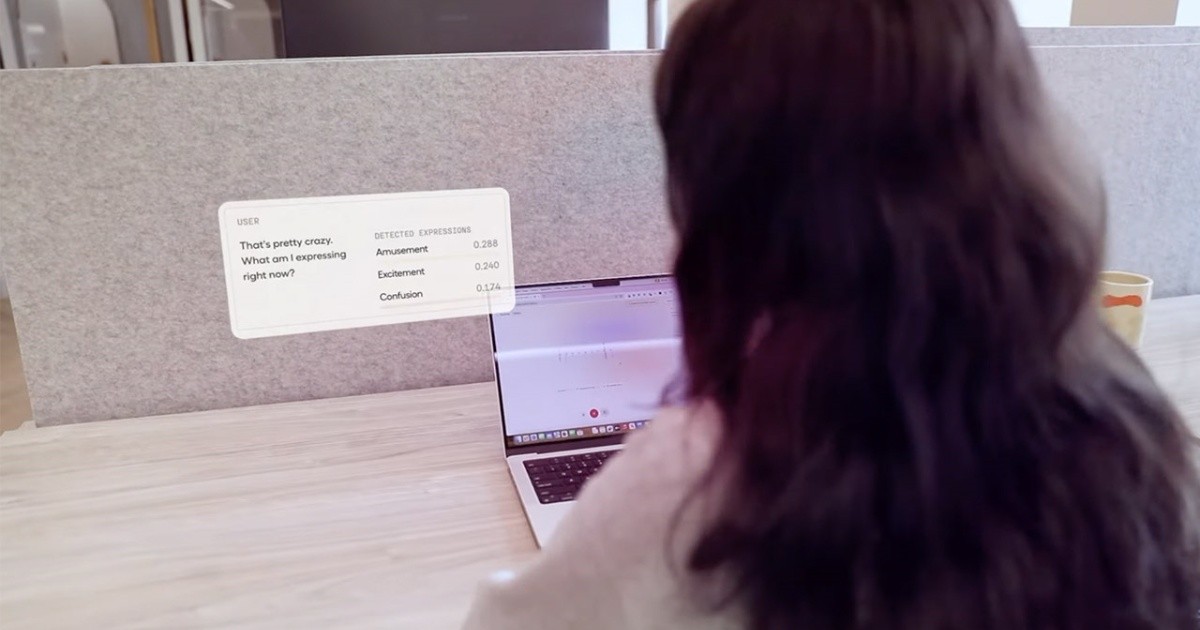

The tool listens to what the user says, transcribes it, and then returns a response that lists the emotions it detected, with additional details about each one. Beyond registering whether the user is sad, anxious, excited or skeptical, the AI model also responds with "empathy."

According to the site, EVI can recognize more than 24 distinct emotional expressions and adapts its conversation with humans based on "different dimensions of expression in vocal intonations, speech prosody, facial expressions" and other parameters.

The model is trained on data from millions of human conversations around the world to capture human tone, reflections and emotions, and its responses are further optimized in real time based on the user's emotional state.

According to official information, developers can integrate EVI as an interface into any application through its application programming interface (API). As for access for the general public, the official website indicates that it will open in April 2024.

How Hume's AI detects and processes emotions

- Interruptible: It stops talking when it is interrupted and starts listening, just like a human.

- Responds to expressions: It understands the natural rises and falls in tone of voice that people use to convey meaning beyond words.

- Expressive text-to-speech: It generates the appropriate tone of voice to respond with natural, expressive speech.

- Aligned with your application: It learns from users' reactions to improve itself, aiming to maximize their happiness and satisfaction.

- Toxic speech: Hume AI identifies toxic speech from online gamers with an error rate of 36%.

- Aligned with well-being: The AI is trained on human reactions so that it promotes positive expressions such as happiness and contentment.
